u-Bat: Ultra-low Power Acoustic Classifier for Edge Devices

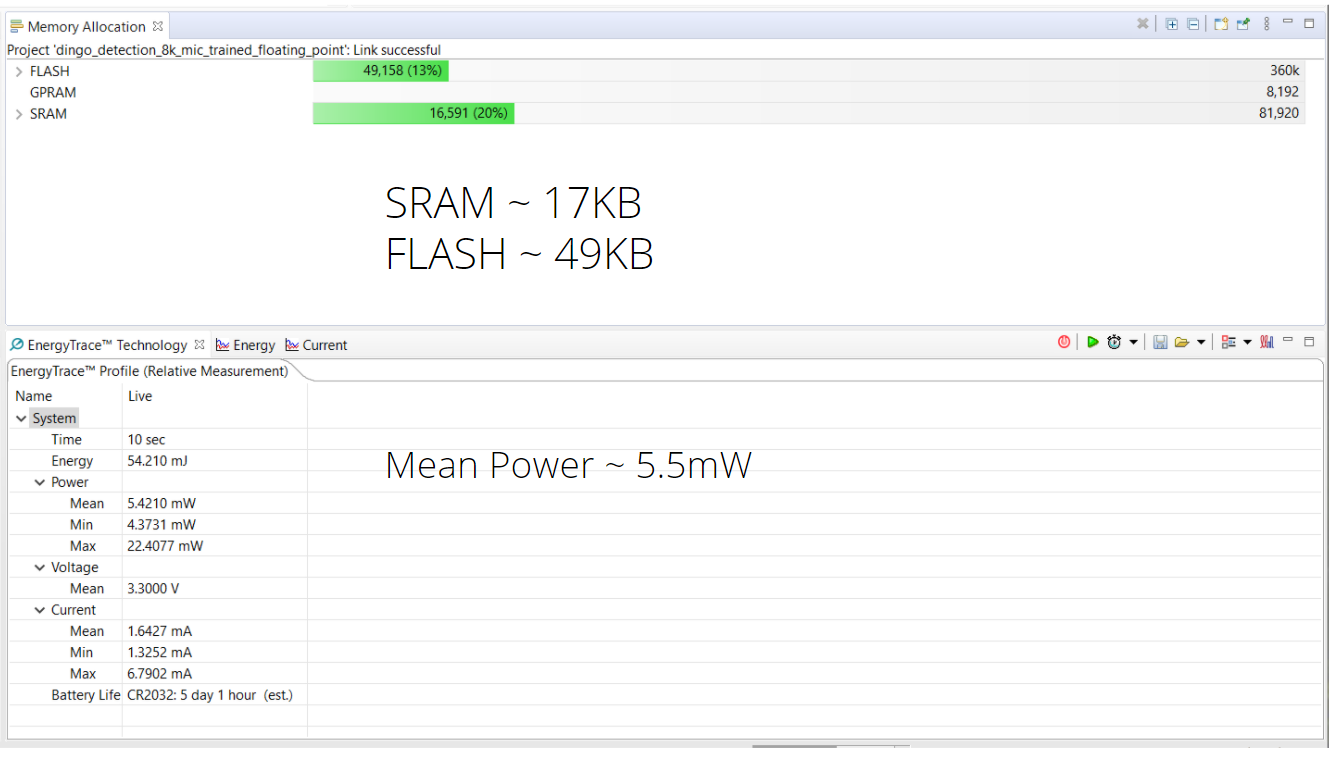

u-Bat (formerly ULP-ACE) aims to build a deployable edge system that detects and classifies audio signatures using an ultra-low-power, lightweight acoustic classifier. Our demonstration chip is TI's CC1352, which has an ARM Cortex-M4F processor and supports TI-RTOS. For Dingo detection on the edge, the application uses 49 KB of flash, 17 KB of SRAM and 5.5 mW of mean power, measured with TI's EnergyTrace.

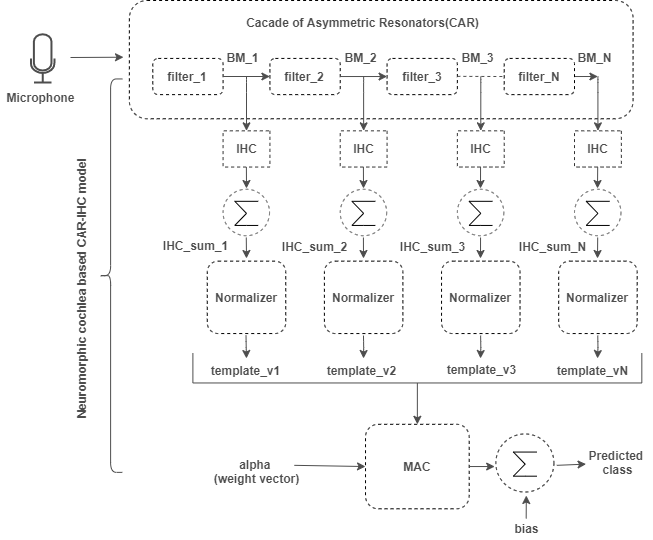

In-filter Computing: Template SVM

In-filter computing performs some pre-processing of the audio to extract the salient features used to train and test the SVM model. The audio samples pass through the CAR block, which is a bank of band-pass filters. For every sample, each CAR filter outputs the frequency component present in its band. These components pass through IHC blocks and are summed over Fs samples (a 1-second window). The summed energy is normalized to the range 0 to 1, and this normalized template vector, computed once per second, is what is used to train and test the model.

For each application, the tunable parameters are adjusted to the frequency bands of the target classes. These parameters are the sampling frequency, the low and high thresholds of the frequency band of interest, the number of cascaded filters, and the range of cascaded filters (channels) selected to train the model. Training uses a one-against-all (OAA) approach. The training dataset should be recorded through the microphone used on the device so that the model generalizes over the effects of the microphone channel.

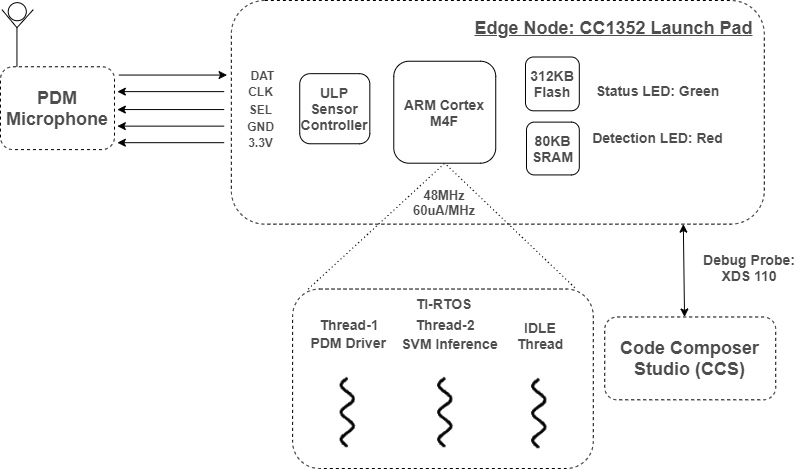

System Implementation

The Template SVM algorithm is implemented on TI's CC1352 chip (LAUNCHXL-CC1352R1), whose ARM Cortex-M4F processor runs at 48 MHz. We leverage TI-RTOS features to get fine-grained control over hardware resources and over power and memory optimization.

An Adafruit PDM MEMS microphone captures the audio signals. It is interfaced through the PDM driver (Thread 1), which runs it at a 1 MHz sampling rate and decimates the stream to 8 kHz PCM samples. These PCM samples are fed to the acoustic classifier, which runs in a separate TI-RTOS thread (Thread 2). Because there is a single ARM core, the two threads alternate: the microphone collects 32 samples at a time, the classifier processes those 32 samples, and it then goes to the IDLE state until the next 32 samples arrive. This runs in real time, and once 8k samples (1 second at 8 kHz) have been processed, a binary decision is made.
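The block-by-block flow of Thread 2 can be sketched in portable C as below. This is a minimal sketch, assuming the TI-RTOS plumbing (PDM driver callback, thread signalling) is omitted and that `on_block` is what the classifier thread would call each time a 32-sample block is ready. `classifier_t`, `front_end_step`, `decide`, `on_block` and the linear weights are hypothetical; the front end is a placeholder for the filter bank and IHC stage, and the decision is a linear score used only for illustration. Since 8000 is a multiple of 32, each decision window is exactly 250 blocks.

```c
#include <stdbool.h>
#include <stddef.h>

#define BLOCK_LEN  32    /* samples per hand-off from the PDM thread (from the text) */
#define WINDOW_LEN 8000  /* 1 s of PCM at 8 kHz; a multiple of BLOCK_LEN (250 blocks) */
#define NUM_CH     4     /* hypothetical number of feature channels */

typedef struct {
    float  acc[NUM_CH];  /* per-channel sums for the current 1 s window */
    size_t n;            /* samples consumed so far in this window */
} classifier_t;

/* Placeholder front end: a real one would run the filter bank and IHC blocks.
 * Here it only half-wave rectifies the sample into channel 0. */
static void front_end_step(float sample, float acc[NUM_CH]) {
    acc[0] += (sample > 0.0f) ? sample : 0.0f;
}

/* Hypothetical linear score for the target class (one OAA classifier):
 * normalize the sums by their maximum, then test w.x + b > 0. */
static bool decide(const float acc[NUM_CH], const float w[NUM_CH], float b) {
    float peak = 0.0f, score = b;
    for (int c = 0; c < NUM_CH; ++c)
        if (acc[c] > peak) peak = acc[c];
    for (int c = 0; c < NUM_CH; ++c)
        score += w[c] * (peak > 0.0f ? acc[c] / peak : 0.0f);
    return score > 0.0f;
}

/* Called by the classifier thread each time a 32-sample block is ready.
 * Returns -1 while the window is still filling (the thread can go idle),
 * otherwise 1 (detected) or 0 (not detected) and starts a new window. */
int on_block(classifier_t *cl, const float block[BLOCK_LEN],
             const float w[NUM_CH], float b) {
    for (size_t i = 0; i < BLOCK_LEN; ++i)
        front_end_step(block[i], cl->acc);
    cl->n += BLOCK_LEN;
    if (cl->n < WINDOW_LEN)
        return -1;
    const int result = decide(cl->acc, w, b) ? 1 : 0;
    for (int c = 0; c < NUM_CH; ++c)
        cl->acc[c] = 0.0f;
    cl->n = 0;
    return result;
}
```

Keeping the per-window state in a small struct means the work done per block is bounded and short, which is what lets the core return to IDLE between the 32-sample hand-offs.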

Current Work and Future Aspirations

- Memory and power optimizations to increase sustainability.

- A fixed-point implementation using CMSIS-NN; so far, everything runs in floating-point precision.

- An automated framework for training, testing and inference on the edge.

- Use Sensor Controller Studio to build a wake-up system so that the device need not be always on, pushing the power requirement further down, and use the ULP microphone sensor on the board.

- Increase the reliability and robustness of the device.

- A custom board with application-specific hardware, incorporating a communication stack and energy harvesting.

Memory and Power

DEMO: Dingo Detection on Edge

*The red LED indicates the sound of Dingo has been detected*

References

- H.R. Sabbella, A.R. Nair, V. Gumme, S.S. Yadav, S. Chakrabartty, C.S. Thakur, “An Always-On tinyML Acoustic Classifier for Ecological Applications,” IEEE ISCAS, 2022.

- H.R. Sabbella, “Bird Hotspots: A tinyML acoustic classification system for ecological insights,” tinyML Asia Technical Forum, 2021.

- A.R. Nair, S. Chakrabartty, C.S. Thakur, “In-filter Computing For Designing Ultra-light Acoustic Pattern Recognizers,” IEEE Internet of Things Journal.